Testing new fitPC2i

Posted on | April 14, 2011 | No Comments

Install Ubuntu Desktop 10.10 on fitPC2i

Note: Initially i planned to use 10.04.2, but the RT2x00 wireless drivers (needed for the RT3070 that’s inside the fitPC2i) are in the kernel from 2.6.33 and up, which makes it worth using 10.10, imho.

Version with 1.6 GHz/1GB

HD 160 GB SATA 2,5″

- Insert HD

- Insert bootable USB with Ubuntu

- With 2 microUSB on front panel – you have 4 USB total – nice.

- Installs without problem.

- Wireless: module rt2870sta loads automagically, works fine. http://wiki.debian.org/rt2870sta

- Run full update – all good.

Temperature still seems a bit worrying … metal case is just about too hot to touch.

IPv6 tunnel via gogo6 on Ubuntu 9.10

Posted on | March 30, 2011 | No Comments

The following is a short summary guide on making an IPv6 tunnel via http://gogonet.gogo6.com/ work on Ubuntu 9.10 Karmic Koala, for those of us who are not on 10.10 yet.

It makes no assumptions about whether tunneling instead of going native IPv6 is a good thing to do, or whether it just makes us lazy and willing to put up with not having IPv6.

Also, it makes no statement about the quality of gogo6/freenet6 tunnels in comparison to other brokers like

The guide is completely based on this one, which i found just works. Thanks for sharing:

Get a freenet6 account here:

As root:

# apt-get install gw6c radvd
# /etc/init.d/radvd stop
# vi /etc/gw6c/gw6c.conf

like so:

userid=USERID
passwd=PASSWORD
server=broker.freenet6.net
auth_method=any
prefixlen=64
template=linux
if_tunnel_v6v4=freenet6
if_tunnel_v6udpv4=freenet6
if_prefix=eth0 (CHANGE IF NEEDED)
keepalive=yes
keepalive_interval=10
host_type=router (CHANGE TO host IF 1 BOX)

then do

# /etc/init.d/gw6c restart

On the first attempt at starting gw6c, i get a short renegotiation of the broker:

root@sbut:/home/sebastian/gogoc-1_2-RELEASE# /etc/init.d/gw6c restart
 * Restarting Gateway6 Client gw6c
No /usr/sbin/gw6c found running; none killed.
Gateway6 Client v6.0-RELEASE build Sep 7 2009-13:59:46
Built on ///Linux palmer 2.6.24-24-server #1 SMP Wed Apr 15 16:36:01 UTC 2009 i686 GNU/Linux///
Received a TSP redirection message from Gateway6 broker.freenet6.net (1200 Redirection).
The Gateway6 redirection list is [ sydney.freenet6.net, amsterdam.freenet6.net, montreal.freenet6.net ].
The optimized Gateway6 redirection list is [ amsterdam.freenet6.net, montreal.freenet6.net, sydney.freenet6.net ].
Received data is invalid.
Last status context is: TSP authentication.
Finished.

After a retry, everything is fine:

root@sbut:/home/sebastian/gogoc-1_2-RELEASE# ping6 2001:470:1f14:b91::2
PING 2001:470:1f14:b91::2(2001:470:1f14:b91::2) 56 data bytes
64 bytes from 2001:470:1f14:b91::2: icmp_seq=1 ttl=57 time=51.8 ms
64 bytes from 2001:470:1f14:b91::2: icmp_seq=2 ttl=57 time=33.4 ms

You might want to put the best gateway, in my case amsterdam.freenet6.net, into the config file above.
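Pinning the gateway comes down to changing the server= line in /etc/gw6c/gw6c.conf. A minimal sketch, assuming GNU sed (as shipped with Ubuntu); set_broker is a hypothetical helper name, not part of gw6c:

```shell
# Sketch: pin the gw6c broker to one gateway by rewriting the server= line.
# set_broker is a hypothetical helper, not something gw6c provides.
set_broker() {
    conf="$1"     # path to the gw6c config file
    server="$2"   # gateway host name to pin
    # Keep a backup copy, then replace the existing server= line in place.
    cp "$conf" "$conf.bak" &&
    sed -i "s/^server=.*/server=$server/" "$conf"
}

# As root, on the real file:
#   set_broker /etc/gw6c/gw6c.conf amsterdam.freenet6.net
#   /etc/init.d/gw6c restart
```

The backup copy ends up next to the config as gw6c.conf.bak, so the redirecting broker entry is easy to restore if the pinned gateway turns out to be a poor choice later.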

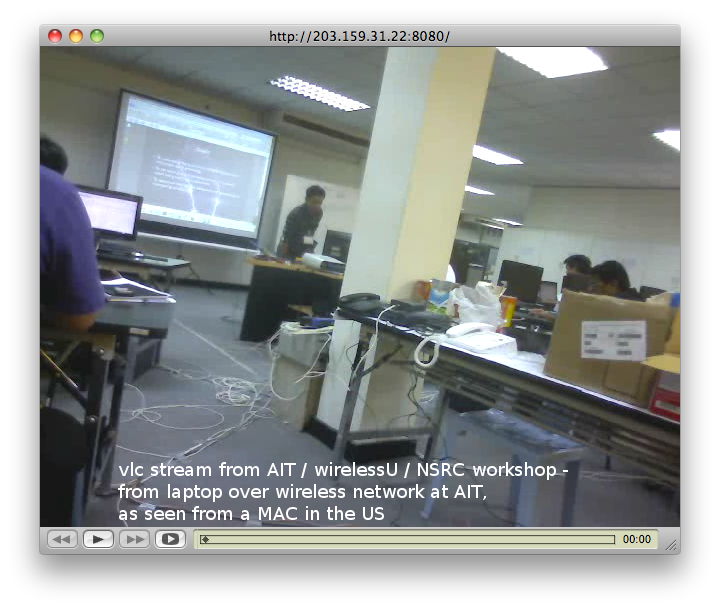

Free low tech video serving

Posted on | February 10, 2011 | No Comments

Notes on open source low cost video streaming

In what follows, we are looking at video streaming solutions for rural networks with limited uplink bandwidths and limited budgets. The requirements are:

- free open source solution

- no budget for additional hardware or network (…)

- low bandwidth for video streams

- absolutely NO hosted solution, no centralized components (in other words – no skype, no youtube, no – not even “your favorite hosted service”)

- streaming live as well as from archive files

Some Open Source streaming options

- VLC

- icecast

- Mediatomb

- Kaltura (hosted, but available as self-hosted system)

- MythTV

- Red5

Read more:

http://klaus.geekserver.net/flash/streaming.html

http://openvideoalliance.org/wiki/index.php?title=List_of_Open_Source_Video_Software

In what follows, we will work with VLC streaming from a standard small laptop.

VLC

Before you begin, read:

MOST IMPORTANT:

http://wiki.videolan.org/Documentation:Streaming_HowTo

MORE DISCUSSION OF DETAILS

http://forum.videolan.org/viewtopic.php?f=4&t=84990

http://en.flossmanuals.net/TheoraCookbook/VLCEncoding

http://forum.videolan.org/viewtopic.php?t=32562

http://wiki.videolan.org/Documentation:Modules/v4l2

low bandwidth streaming:

http://forum.videolan.org/viewtopic.php?f=4&t=85932

Requirements

Our system is:

Ubuntu Linux 10.04

USB Video cam

Install packages as follows, from the command line (or alternatively via the Synaptic GUI):

# apt-get install vlc vlc-nox vlc-data libvlc-dev libvlc2 libvlccore-dev libvlccore2

(there might be more i forget here … follow dependencies as suggested by package manager)

From here on, it’s the Linux command line:

Identify webcam by doing

# ls /dev/video*

Plug and unplug until you find it.

In my case, it is

/dev/video0

then try

# vlc v4l2:///dev/video0

you should see the webcam streamed in your local vlc.

If this is working, try streaming and transcoding, with display at the same time:

vlc v4l2:// :v4l-vdev="/dev/video0" :v4l-adev="/dev/audio1" :v4l-norm=3 :v4l-frequency=-1 :v4l-caching=300 :v4l-chroma="" :v4l-fps=-1.000000 :v4l-samplerate=44100 :v4l-channel=0 :v4l-tuner=-1 :v4l-audio=-1 :v4l-stereo :v4l-width=640 :v4l-height=480 :v4l-brightness=-1 :v4l-colour=-1 :v4l-hue=-1 :v4l-contrast=-1 :no-v4l-mjpeg :v4l-decimation=1 :v4l-quality=100 --sout "#transcode{vcodec=theo,vb=800,scale=1,acodec=vorb,ab=128,channels=2,samplerate=44100}:display"

This example works for me – note that your device names and detail settings might be different.

Next, try streaming it to the network:

In the following examples,

my machine is 192.168.0.3

the clients are 192.168.0.x

On a client, you can test your stream from the server by doing

vlc -vvv http://192.168.0.3:8080

or by opening stream via GUI client.

My best result was achieved with the following settings for the server’s stream:

vlc v4l2:// :v4l-vdev="/dev/video0" :v4l-adev="/dev/audio1" :v4l-norm=3 :v4l-frequency=-1 :v4l-caching=300 :v4l-chroma="" :v4l-fps=-1.000000 :v4l-samplerate=44100 :v4l-channel=0 :v4l-tuner=-1 :v4l-audio=-1 :v4l-stereo :v4l-width=480 :v4l-height=360 :v4l-brightness=-1 :v4l-colour=-1 :v4l-hue=-1 :v4l-contrast=-1 :no-v4l-mjpeg :v4l-decimation=1 :v4l-quality=100 --sout="#transcode{vcodec=mp4v,vb=800,fps=12,scale=1.0,acodec=mp3,ab=90,channels=1,samplerate=44100}:standard{access=http,mux=ts,dst=192.168.0.3:8080}"

The one problem with that line was sound, which did not work yet.

Great help from the videolan forum, thanks! https://forum.videolan.org/viewtopic.php?f=4&t=87529&e=0&sid=424a9bacd39a0d3650777aef87699c7f

“Looking at the input string with the “Show More Options” box checked in the GUI will display your default audio input like…

vlc v4l2:// :input-slave=alsa:// :v4l2-standard=0 ”

That worked for me, so the command becomes:

vlc v4l2:// :input-slave=alsa:// :v4l-vdev="/dev/video0" :v4l-norm=3 :v4l-frequency=-1 :v4l-caching=300 :v4l-chroma="" :v4l-fps=-1.000000 :v4l-samplerate=44100 :v4l-channel=0 :v4l-tuner=-1 :v4l-audio=-1 :v4l-stereo :v4l-width=480 :v4l-height=360 :v4l-brightness=-1 :v4l-colour=-1 :v4l-hue=-1 :v4l-contrast=-1 :no-v4l-mjpeg :v4l-decimation=1 :v4l-quality=100 --sout="#transcode{vcodec=mp4v,vb=800,fps=12,scale=1.0,acodec=mp3,ab=90,channels=1,samplerate=44100}:standard{access=http,mux=ts,dst=192.168.0.3:8080}"

In order to find the best settings (size, bandwidth, encoding, mux, etc) you have to play with the parameters –

read

http://wiki.videolan.org/Documentation:Streaming_HowTo/Command_Line_Examples

and play with different settings.
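When playing with vb and ab, it helps to check what a setting costs on the uplink. A rough sketch, assuming that vb and ab are kbit/s as in the transcode options, that every HTTP client receives its own copy of the stream, and that mux and HTTP overhead can be ignored; stream_kbps is a hypothetical helper name:

```shell
# Rough uplink estimate for the HTTP stream.
# Assumptions: bitrates are in kbit/s, one full copy of the stream per
# client, mux and HTTP overhead not counted.
stream_kbps() {
    vb="$1"        # video bitrate in kbit/s
    ab="$2"        # audio bitrate in kbit/s
    clients="$3"   # number of simultaneous viewers
    echo $(( (vb + ab) * clients ))
}

stream_kbps 800 90 1   # prints 890
stream_kbps 800 90 5   # prints 4450
```

With the settings used here, five viewers already need about 4.5 Mbit/s of uplink, which is why fps, frame size and vb are the first things to lower on a thin rural link.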

How to find the PulseAudio device on Ubuntu 10.04:

pactl list | grep -A2 'Source #' | grep 'Name: ' | cut -d" " -f2

gives me

alsa_input.usb-046d_0992_D09F8DDF-02-U0x46d0x992.analog-mono
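The same lookup can be done in a single awk pass. This is a sketch under the assumption that pactl list prints a "Source #N" header followed by an indented "Name:" line, which is what the grep pipeline relies on as well; pulse_sources is a hypothetical helper name:

```shell
# Print the name of every PulseAudio source found on stdin.
# Unlike grep -A2, this takes the first "Name:" line after each
# "Source #" header, however many lines lie in between.
pulse_sources() {
    awk '/Source #/ { in_source = 1; next }
         in_source && /Name: / { print $2; in_source = 0 }'
}

# Usage: pactl list | pulse_sources
```

Sinks and other sections are skipped, because a "Name:" line only counts once a "Source #" header has been seen.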

Low cost IPv6 gateway on a Linksys router

Posted on | September 30, 2010 | No Comments

Here’s a little guide on how to get started with your own IPv6 gateway on a Linksys WRT54GL router – or any other device that can run OpenWRT.

http://itu.dk/pit/index.php?n=Main.IPv6-6to4onOpenWRTRouterBehindNAT

This will enable you to gain experience with IPv6 in your own network, even if the ISPs and organisations around you are not ready for it yet.

Mind you, this is not a replacement for true native IPv6, just a little starter.

Also, the guide does not go into all the concepts and reasons for IPv6 – you might want to read up on these to fully understand what you are doing. Wikipedia is a good starting point: http://en.wikipedia.org/wiki/Ipv6

(This little demo setup is at the IT University of Copenhagen, where we are working with end-to-end IPv6 enabled wireless sensor networks, BLIP and 6LoWPAN.)

Introducing zero, defining bottom?

Posted on | May 25, 2010 | No Comments

Facebook introduces the free service 0.facebook.com in 45 countries:

http://blog.facebook.com/blog.php?post=391295167130

and many authors are discussing its impact on Africa*, controversially:

http://whiteafrican.com/2010/05/21/facebook-zero-a-paradigm-shift/

http://blurringborders.com/2010/05/20/will-africans-have-a-say-on-privacy/

http://www.ictworks.org/news/2010/05/24/4-reasons-celebrate-facebook-zero-africa

Not that worried about privacy, as expressed earlier, here: http://form.less.dk/?p=211 –

but thinking that moves like this, game changing as they are,

define and stabilize bottom-of-the-pyramid

as much as they address it.

does the so-called bottom get invited here to use passively, or to build?

how will the line between the two be modified through moves like this?

sure i’d love to get surprised, but …

Out to the clouds?

Posted on | May 15, 2010 | No Comments

The following is a collection of considerations and criteria from discussions of cloud computing, cloudsourcing and outsourcing in various organisations,

e.g. universities in several countries, tech startups, NGOs.

While this write-up is inspired by many colleagues in various places and networks (thanks!), the views expressed are my personal ones and not necessarily shared.

It’s work-in-progress, or thinking aloud really – all comments are extremely welcome and will be published here.

I will focus these around a question that many organisations are facing these days:

whether to outsource mail, calendar and related intranet service operations, or run those inside one’s own organisation.

To set the context, Google offers free mail and apps to e.g. African educational organizations:

http://www.google.com/intl/en/press/pressrel/apps_rwanda.html

This is often described as “putting things out in the cloud”, however the term “cloud” itself deserves some attention.

Wikipedia’s take on this,

http://en.wikipedia.org/wiki/Cloud_computing

“Cloud computing is Internet-based computing, whereby shared resources, software and information are provided to computers and other devices on-demand, like the electricity grid.”

is not very helpful. Following this definition, almost everything we do on a computer these days is actually cloud computing.

I don’t want to get into the long discussion of terms, myths and hypes here, however,

it seems important to note that

“real” cloud computing would have to be defined a bit more narrowly, specifying which resources we are talking about – e.g. CPU cycles, storage, software resources, and so forth.

Distributing your backup storage, your 3D rendering jobs or your trust base system among computers on the net – that is what i would call true cloud.

Having your mail and calendar servers run by someone else really is a lot closer to classical outsourcing, and we should discuss it as such.

So, what is the question?

Many universities today are considering whether to hand over operations to players like Google, Microsoft, Amazon, and many others in the marketplace.

Here are some things to consider:

1. Cost

There is no reason to believe that outsourcing as a rule will be more cost effective (in terms of TCO) than operating your own services.

This myth arose sometime in the 90s, and managements around the world then had to learn painfully that it is just that: a myth.

The truth is: it all depends. On how well you run your outsourcing. Sorry if that’s not helpful.

2. People

In terms of people resources, managing outsourcing competently (!) may take just as much time as internal operations. It doesn’t have to, but it may, depending on how you manage.

3. Hassle

Often we hear the argument that running your own services takes way too much time and resources.

Frankly, if it does, then you are not doing it right.

If it is possible for your potential outsourcing partner, located in a high cost of labour country, to offer you these services and make a good profit with this –

then clearly it can be done.

True, it is also about size of operations, but not only – it is equally about learning from best practices.

If your core routines – like HR routines, deployment strategies, backup and recovery – are not in order, no doubt you will suffer.

With regard to mail and intranet services, most of the attention and time will go to proper routines, like new users and leaving users – and these core routines will be the same, no matter which technical infrastructure they are executed on. Even if someone else runs your server, it is still you who has to know about authorizations and access.

4. Regrets

Insourcing later what you have outsourced now comes at a price.

It is all about skills: in case you decide some years down the line to insource services that you have abandoned today, this will become expensive.

You either have to reconnect and rebuild your organisation’s capacity, or buy people from outside. Even telling what skills you will need might become a challenge.

5. Legislative factors

Most IT legislations somewhat limit the places you may put your data and services.

For example, in EU countries, security standards demand that we keep services in Europe or with companies that maintain a legal presence in Europe.

Authorities probably wouldn’t be happy if we put our data storage into North Korea – just as an example.

Do you know what your legislation says about this?

6. Location of services

Speaking of location of data and service – face it: chances are, you will never really be sure where they *really* are.

In a truly clouded environment, physical data may be moved at leisure –

and often, even inside your partner company, people would not necessarily know where servers and CPUs reside.

7. Dependence on upstream connectivity

Having services outside your local network or country makes you vulnerable to failure of upstream connectivity.

True, if your international links are down, you would not be able to reach your global contacts by mail anyway, but at least your faculty mail and calendar would work.

This is a serious consideration of how dependent on factors outside your control you want to be.

See for example this interesting post, discussing the impact of a major outage of the East African cable rings:

“Why we urgently need offline cloud computing redundancy in Kenya”

http://www.ictworks.org/network/ictworks-network/384

8. Cloud or Central Control

With what we said above, take a look at the picture Wikipedia uses to illustrate the cloud:

http://en.wikipedia.org/wiki/File:Cloud_computing.svg

You might add players to this picture, but the telling fact is that in today’s reality, it is really just a few big ones dominating the market.

We often romanticize the cloud, associating terms like … distribution … sharing … cooperating – but in fact, the cloud might be much more central than we think.

Together with ambitions of part of the internet industry to take all core services away from the not so privileged, as they are not capable of running these securely anyway –

a pretty dark picture emerges, the picture of tightly controlled global IT structures that throw away the initial values of the internet.

Read about some of these considerations here:

http://www.networkworld.com/news/2010/010410-outlook-vision.html

9. Security

This is a bit of a draw: none of the players out there has a clean track record when it comes to security.

To many, the two words “Microsoft” and “Security” combined in one sentence would seem like a bit of an oxymoron.

Microsoft, like other cloud computing players, clearly says that they will not be liable for security problems, e.g. data loss, in their cloud services:

Google has been criticized for its handling of privacy in search results and apps like Buzz.

See e.g. here for part of this discussion:

http://www.eff.org/deeplinks/2009/12/google-ceo-eric-schmidt-dismisses-privacy

http://news.bbc.co.uk/2/hi/10107691.stm

However, it would be arrogant to assume that you will do better yourself.

High security standards can be reached by yourself as well as by external partners.

You are less likely to be hit by random mass scripting attacks if you keep your services in your own backyard,

however, if somebody really really is after you –

they will know where to find you, no matter what.

Often overlooked: a high percentage of potential attacks come from inside your own organization – and in that case, maybe your services are safer if run by an external partner.

10. Terms

Take a close look at contract terms, e.g. notice period for cancellation or change of services.

Many major players, be that software companies or social websites, have changed their terms on short notice, and the changes are often poorly documented.

Take Facebook, whose bad practice of continuously changing privacy policies in order to make money out of the data the users gave them has now become a mainstream concern.

There is no guarantee that what you are given for free will stay free forever. It might just be “pusher marketing”: give it away for free until people really like it and can’t do without it – then you start charging. Or change the rules.

Uncertainty of rules and conditions together with short periods of notice, e.g. cancellation notice, makes things even worse.

Having to move all your operations with a one month warning is bad news.

Note that these skeptical remarks are by no means assuming bad intent on the side of those who are offering free services today.

11. Pick and choose

Pick carefully what you like and do not like. It is no problem having all your students use Google accounts, apps and so forth while keeping your own infrastructure intact. You do not have to be owned in order to be part of a cloud.

You can have your own Zimbra and get the best out of Google Apps – that’s the whole idea of “cloud” interoperability.

The don’ts: Prohibitive factors

- Never outsource services that you have not run yourself and fully understood before you outsource them. Doing that will make it impossible to manage, control and evaluate your partner’s performance. They will be able to sell you stuff you do not need and should not pay for. So, in clear words: run your own mail server first, at least for testing, and once you understand it thoroughly, revisit the question of outsourcing.

- Never outsource services that need to be tightly integrated into your own IT infrastructure. If the doors to your building are controlled by IT services, you had better keep those close to your door. It is so frustrating standing in front of a locked door, waiting for a network connection to come back up, or your helpline to answer.

- Never outsource anything that is closely related to your core competence. For a technology and science organisation, IT may or may not be seen as core competence. Personally, i would think that a university that aims to teach people how to build and control infrastructures for global IT also should be able to run such infrastructure. As a student, i would find it hard to trust knowledge by people who have not been in touch with what they are teaching.

- Never outsource anything into a tech lock-in situation – that means, only choose services and technologies for which you can find an alternative supplier if need be. Use Open Source technology, use networks, use open knowledge.

Conclusions

It’s impossible to come to a general conclusion regarding cloud and outsourcing – but just for this concrete example, i will offer mine:

If you are a university or educational organization for whom IT infrastructures belong to curriculum and expertise – run your own services.

If your organisation is still small and weak with regard to people resources and skills – you might hear the advice to outsource, because of your “weakness”. I would turn this around: If your organization is young and growing – that’s one more reason to run services yourself. To learn, develop and learn how to control processes. Once you have, you can always outsource later – and control that process.

Needless to say, if your core business is teaching graphics design or chemistry or literature – don’t bother running your own mail servers. 🙂

Why WiMax (still) is dead

Posted on | February 9, 2010 | No Comments

Recently, we have been asked again about the role of WiMax (802.16) in rural and remote networks.

In brief, we still think it is not a preferred option, and here is why:

- Price: Simply, for what it can do – it is too expensive. Smart use of cheaper technologies, and upcoming improvements of existing standards (see e.g. Ubiquiti’s AirMax technology), can deliver the same performance at lower prices.

- Focus Area: In our view, WiMax’s strengths are infrastructure networks in densely populated areas, with massively multipath non-line-of-sight conditions. Where line of sight is free, or just moderately obscured, WiMax does not connect you any better than its cheaper competitors, either in bandwidth or in stability. Where line of sight is seriously blocked for a long haul link – neither WiMax nor WiFi will get you around that.

- Clients: 802.11 clients are ubiquitous in today’s end user devices – there is hardly a laptop or smart phone that comes without WiFi. WiMax however is absent – it would have to be added as a CPE device for each and every user, again bringing up the price for hardware and deployment.

- Competition: For long range infrastructure networks, WiMax is beaten by WiFi and other, proprietary standards. For highly mobile urban roaming, mobile data (3G etc) wins. So, what market niche is left for WiMax?

These are just a few (simplified) points in a discussion.

No doubt, there will be networks where WiMax is chosen, and where budgets allow, there is nothing seriously wrong with that.

For low cost rural networks however, and for rural business to make sense – there are better choices.

We will be discussing these issues again at the upcoming ICTP-ITU school on wireless, at ICTP Trieste.

Berkman Center study on global broadband names Open Access as key in broadband success

Posted on | October 16, 2009 | No Comments

The draft report is open for comment here:

http://cyber.law.harvard.edu/newsroom/broadband_review_draft

From the report:

“Our most surprising and significant finding is that ‘open access’ policies—unbundling, bitstream access, collocation requirements, wholesaling, and/or functional separation—are almost universally understood as having played a core role in the first generation transition to broadband [dial-up to broadband] in most of the high performing countries; that they now play a core role in planning for the next generation transition [faster and always available connectivity]; and that the positive impact of such policies is strongly supported by the evidence of the first generation broadband transition.”

Furthermore:

“We find that in countries where an engaged regulator enforced open access obligations, competitors that entered using these open access facilities provided an important catalyst for the development of robust competition which, in most cases, contributed to strong broadband performance across a range of metrics.”

Nepal Wireless Workshop, September 2009 – Pictures

Posted on | October 16, 2009 | No Comments

252 pictures from the Nepal Wireless Workshop, September 2009, are now available here –

a detailed report soon to be available.

2 Million downloads for the “green book”

Posted on | October 13, 2009 | No Comments

Wireless Networking in the Developing World is a free book about designing, implementing, and maintaining low-cost wireless networks.

Since 2008, WNDW.net has served over two million downloads of the book! WNDW is available for free in seven languages. If you haven’t checked it out already, download a copy for yourself.
